This is part 1 of a two-part series on durable execution, what it is, why it is important, and how to pull it off. Check out part 2 about how Conductor, a workflow orchestration engine, seamlessly integrates durable execution into applications.

Today, we live in a world where applications have become more complex, with separate microservices for databases, networking, messaging systems, and other core functions like payments or search. As application complexity scales up, more points of failure are introduced into the application’s execution flow.

Failure can happen, and when you are running sufficiently large amounts of workloads, it happens all the time. For any business, guaranteed completion is the necessary baseline for market entry. A search query on Google should eventually return the appropriate search results, and data keyed into a database should persist — just like how going to the supermarket gets you groceries. This means handling failure and edge cases becomes a key business concern, especially for distributed applications where points of failure are much more common.

How can we guarantee that an application flow runs until completion? This guarantee is especially important when it comes to long-running processes, wherein the prolonged runtime introduces greater risk of failure and interruptions. Within that period of time, services may go down, the application code or even the infrastructure may change, leading to interruptions or premature termination. Let’s consider a long-running application flow and all its potential points of failure, within the framework of a state machine.

Applications as state machines

A state machine refers to an abstract system that has a fixed number of states. In a state machine, the system can transition from one state to another at any point in time based on predefined rules and carries out a set of actions associated with the state.

Applications can be conceptualized as state machines, wherein failure can halt the progression of one state to another. In software applications, there are two types of state to consider:

- Business state is about high-level processes.

- Application state refers to granular, execution-level details.

Take an order management system for example:

- The business state would refer to the order status, whether it has been received, processed, or delivered.

- The application state would refer to the execution-level state, such as order validation, invoice generation, and so on.

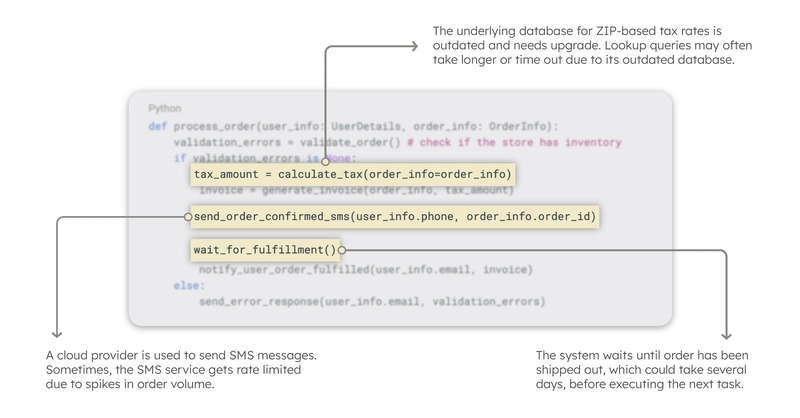

These business states can be mapped to application states. Within a single business state, such as order processing, the system can undergo a series of application state changes, from order validation to shipping notification. Each of these state changes corresponds to a set of tasks – inventory queries, tax calculation, customer notifications:

def process_order(user_info: UserDetails, order_info: OrderInfo):

validation_errors = validate_order() # check if the store has inventory

if validation_errors is None:

tax_amount = calculate_tax(order_info=order_info)

invoice = generate_invoice(order_info, tax_amount)

send_order_confirmed_sms(user_info.phone, order_info.order_id)

wait_for_fulfillment()

notify_user_order_fulfilled(user_info.email, invoice)

else:

send_error_response(user_info.email, validation_errors)

At each juncture, potential for failure arises: the database query may time out, or the cloud SMS service may get rate limited.

Without proper failure handling and state management, these failures cause the system to end up in an inconsistent state. For example, if the wait_for_fulfillment() task times out, the process_order function would fail to complete and the user would not receive the shipping notification. Barring a timely resolution, the system’s state would remain in order processing, even if the order has already arrived at its destination.

The problems continue piling up when you have to restart the application flow from the point of failure and determine which tasks have yet to be completed across an entire network of distributed services. These fixes can amount to numerous lines of additional code, simply for state visibility and persistence, retry policies, and validation checks.

Enter durable execution.

What is durable execution?

Durable execution refers to a system’s ability to preserve its application state and persist execution even in face of interruption or failure. With stateful memory, every task, its inputs/outputs, and the overall progression of the application flow will persist, enabling the application to automatically retry or continue running when faced with infrastructure disruptions.

When applications are built for durable execution, they inherit a number of valuable features:

- Fault tolerance—The application can resume operation from the point of interruption and produce reliable results even if there are failures or crashes on the backend.

- Data integrity—No data is lost even with network failures.

- Resilience for long-running processes—Despite prolonged idle periods, processes can continue for months or years on end and persist indefinitely until completion, even if the underlying infrastructure or workflow implementation has changed.

- Observability—With reliable monitoring data, such as logs and metrics about the system activities, operations teams get full visibility of the application state and behavior.

If durable execution is so important, how can we start developing durable applications? Let’s explore some best practices and patterns.

Best practices for creating durable applications

The key to durable execution is to separate state management from your application code. Here are some best practices for building applications with durable execution:

- Use workers instead of API endpoints.

- Write tasks as stateless services.

- Encode resiliency patterns separately.

Use workers instead of API endpoints

There are cases when you should implement your application functions using poll-based task workers rather than API endpoints.

Workers tend to be more lightweight when it comes to infrastructure and security issues, and are better at handling back pressure and rate limits compared to API endpoints, which makes it easier to implement durable execution. On the other hand, to deploy API endpoints that provide reliable service, you would have to also think about additional infrastructure issues like API gateways, load balancing, DNS, and so on. The infrastructure complexity that comes with API endpoints means that failure handling, load performance, and retry policies are much trickier to get right compared to worker-based task queues.

Furthermore, all these API calls will still have to be orchestrated into a functioning application flow, which if not designed properly, could end up as a spaghetti code full of dependencies. If your functions are designed as API calls that only serve to be called through an orchestration layer, it may be worthwhile to consider using workers instead.

Write tasks as stateless services

When writing tasks, they should be stateless services rather than stateful. Stateless functions are much easier to reason with, because they do not encode the application state or logic within its code. Instead, each task is designed with well-defined input/output parameters, where external dependencies or state can be passed into the task as needed. This prevents the system from entering an inconsistent state upon encountering an error or interruption.

With stateless services, it becomes much easier to write tests, make changes, or reuse code. These advantages also mean that it is much easier to deploy and scale your application according to demand, thereby avoiding failures due to inadequate resources.

To create well-designed stateless services, you can write your tasks with the following features:

- Immutability—Inputs do not get modified and new values are created and returned by the function to prevent unwanted side effects in case of interruption or failure.

- Idempotency—As long as the same input parameters are given, the function result will remain the same no matter how many times the function is invoked.

- Atomicity—The operation completes fully without interruption by any changes in the system state.

Encode resiliency patterns separately

Resiliency patterns define the behavior of your code when errors occur, such as timeout settings, resource redundancy, circuit breakers, load balancing, retry policies, and so on. These rules should be abstracted away from your application code and made modifiable at runtime to maximize code reuse, configurability, and ease of testing.

- Code reuse—Common resiliency patterns can be reused across multiple application projects, reducing duplication.

- Configurability—Resilience parameters, such as circuit breaker threshold or retry count, can be dynamically adjusted to prevent cascading failures and to minimize downtime.

- Ease of testing—Your application’s resilience mechanisms can be tested and validated separately, without having to test the entire application flow.

Using stateful platforms for durable execution

Stateful platforms encode durable execution by default into your applications. Instead of having to build for durable execution yourself, these platforms allow you to quickly create workflows with state management and failure handling by default. In other words, they facilitate a clear separation between application code and state management, which is key to efficiently achieving durable execution.

With so many options, which platform should we use? Here’s a handy checklist for choosing the right platform:

- Can I monitor and debug my flows during production at scale?

- Can I analyze performance, latency, and failure patterns in my flows?

- Can I quickly identify the location and cause of failures within complex flows?

- Can I write my workflow code in the language and framework of my choice?

- Can I build very complex flows?

- How about tens of thousands of parallel forks in a single execution?

- What about running millions of concurrent executions?

- Can I implement patterns like circuit breakers and caching without worrying about implementation details?

- Can I safely version my flows without adding tech debt?

- Can I safely re-run the same flow over and over again?

- Can I automatically document and visualize my flows?

Ultimately, the right platform should allow you to focus on writing code that drives the business forward, instead of code that maintains the nuts and bolts of the underlying infrastructure.

Conductor is a workflow orchestration platform that abstracts away the complexities of underlying infrastructure and empowers developers to rapidly build applications. Having learned about durable execution and how it works, you can read more about how Conductor delivers resilient systems out of the box in the next article.

Orkes Cloud is a fully managed and hosted Conductor service that can scale seamlessly according to your needs. When you use Conductor via Orkes Cloud, your engineers don’t need to worry about set-up, tuning, patching, and managing high-performance Conductor clusters. Try it out with the free Developer Playground.

Top comments (0)